Revolutionizing business through the application of Large Language Models (LLMs)

Introduction

Large Language Models (LLMs) are at the forefront of the rapidly evolving field of artificial intelligence (AI), opening a new era in process automation and making automated virtual assistants practical. This article surveys the applications of LLMs, with particular emphasis on how they improve efficiency, decision making, and task execution in automated processes. It outlines current applications and potential future uses, and notes the need to keep improving these models so that they behave comprehensively and objectively. Through this exploration, we aim to build a basic understanding of the capabilities and challenges of LLMs, paving the way for their effective application in automating complex tasks and redefining the boundaries of AI in the modern world.

1. Methodology for building LLM-based autonomous agents

An overview of the development and application of LLM-based autonomous agents is provided below.

The two main factors in building LLM-based autonomous agents are as follows:

1.1. Agent architecture design

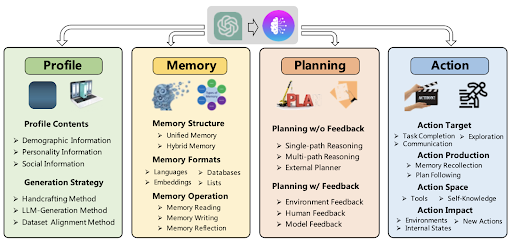

Figure 1: A unified framework for the architecture design of LLM-based autonomous agents

The architecture design focuses on maximizing the capabilities of the LLM, incorporating modules like profile, memory, planning, and action, as shown in Figure 1. The profile module defines the agent’s role, while the memory and planning modules place the agent in a dynamic environment, enabling it to recall past behaviors and plan future actions. The action module translates decisions into specific outputs.
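The four modules described above can be sketched in a few lines of Python. This is a minimal illustration rather than the framework's actual implementation: the class names, the `llm` callable, and the `fake_llm` stand-in are all assumptions introduced here so that the example runs without a real model.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Profile:
    """Profile module: defines the agent's role."""
    role: str


@dataclass
class Memory:
    """Memory module: stores past behaviors so they can be recalled later."""
    events: List[str] = field(default_factory=list)

    def remember(self, event: str) -> None:
        self.events.append(event)

    def recall(self, last_n: int = 3) -> List[str]:
        return self.events[-last_n:]


@dataclass
class Agent:
    profile: Profile
    llm: Callable[[str], str]  # hypothetical: any function mapping a prompt to text
    memory: Memory = field(default_factory=Memory)

    def plan(self, goal: str) -> List[str]:
        """Planning module: ask the LLM for one step per line."""
        prompt = (
            f"You are {self.profile.role}.\n"
            f"Recent actions: {self.memory.recall()}\n"
            f"Goal: {goal}\n"
            "List the steps, one per line."
        )
        reply = self.llm(prompt)
        return [line.strip() for line in reply.splitlines() if line.strip()]

    def act(self, step: str) -> str:
        """Action module: turn a planned step into a concrete output."""
        result = f"executed: {step}"
        self.memory.remember(result)
        return result

    def run(self, goal: str) -> List[str]:
        return [self.act(step) for step in self.plan(goal)]


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call, used only to make the sketch runnable."""
    return "open the contract\nsummarize key clauses"


agent = Agent(profile=Profile(role="a contract review assistant"), llm=fake_llm)
outputs = agent.run("review the contract")
print(outputs)
```

Passing the model in as a plain callable keeps the sketch independent of any particular LLM provider; a real agent would replace `fake_llm` with an actual model call and `act` with real tool invocations.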

1.2. Agent’s capability acquisition

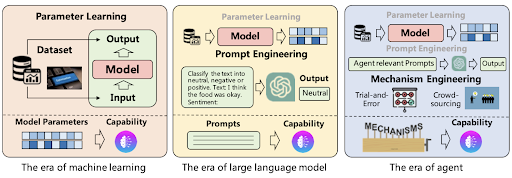

Figure 2: An illustration of the strategy changes to obtain model capabilities.

The goal of capability acquisition is to enable the agent to complete specific tasks, using techniques such as fine-tuning and prompt engineering. Fine-tuning improves an agent’s performance on specific tasks by training on task-specific datasets, while prompt engineering involves designing prompts to elicit desired behaviors.

Acquisition with LLM fine-tuning uses “Human-annotated datasets”, “LLM generated datasets”, or “Real-world datasets”. However, this technique can be complex, expensive, and time-consuming as it takes a lot of work to build the dataset. For example, to create a Human-annotated dataset, in addition to designing the annotation tasks, workers are the primary resource required to complete it. Additionally, using a different LLM to create a dataset can be costly, particularly if the model requires large sample sizes.

However, fine-tuning LLMs is not the only option in the LLM era. Model capabilities can also be acquired without fine-tuning, either by “designing sophisticated prompts,” which prompt engineers can implement quickly, or by “designing appropriate agent evolution mechanisms,” which is the work of mechanism engineering.

- Prompt Engineering is a new strategy that leverages natural language to influence LLM actions. By carefully crafting prompts, users can guide LLMs to demonstrate specific abilities, such as complex task reasoning or self-awareness. For example, Chain of Thought (CoT) prompting supplies intermediate reasoning steps in the form of brief worked examples, while Retroformer uses prompts to guide agents in reflecting on past failures to improve future actions.

- Mechanism Engineering differs from fine-tuning and prompt engineering in that it focuses on designing unique mechanisms to enhance the agent’s capabilities. This strategy involves developing specialized modules, introducing new working rules, or incorporating mechanisms such as trial and error, crowdsourcing, or experience accumulation to enable agents to function more efficiently and adaptably.
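To make the Chain of Thought idea concrete, the sketch below assembles a few-shot prompt in which each worked example carries its intermediate reasoning. The `build_cot_prompt` helper, the `Q:`/`Reasoning:`/`A:` layout, and the sample questions are illustrative assumptions, not a format prescribed by any particular model.

```python
from typing import List, Tuple

# Each example is (question, intermediate reasoning, final answer).
Example = Tuple[str, str, str]


def build_cot_prompt(examples: List[Example], question: str) -> str:
    """Assemble a few-shot Chain-of-Thought prompt from worked examples."""
    parts = []
    for q, reasoning, answer in examples:
        parts.append(f"Q: {q}\nReasoning: {reasoning}\nA: {answer}")
    # The final block leaves the reasoning open for the model to complete.
    parts.append(f"Q: {question}\nReasoning:")
    return "\n\n".join(parts)


examples = [
    (
        "A contract runs 12 months and renews for 6 more. How long in total?",
        "The initial term is 12 months. The renewal adds 6 months. 12 + 6 = 18.",
        "18 months",
    )
]
prompt = build_cot_prompt(
    examples, "An invoice of 200 has a 10% late fee. What is due?"
)
print(prompt)
```

Ending the prompt on an open `Reasoning:` line nudges the model to write out its steps before the answer, which is the behavior the worked examples are meant to elicit.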

When comparing strategies for acquiring capabilities, fine-tuning adjusts model parameters to incorporate in-depth knowledge of the specific task; however, this technique is mostly suitable for open-source LLMs. Prompt engineering and mechanism engineering, on the other hand, enhance LLM capabilities through carefully designed prompts or specialized mechanisms, and they work for both open-source and closed-source LLMs. However, because of the LLM’s limited context window, these techniques cannot handle extended task information, and the large design space of prompts and mechanisms makes it challenging to identify the best solution.
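The context window limitation can be illustrated with a small sketch that keeps only the most recent task information that fits a fixed budget. The `fit_to_context` helper is hypothetical, and counting whitespace-separated words as tokens is a simplifying assumption; a real system would use the model's own tokenizer.

```python
from typing import List


def fit_to_context(messages: List[str], max_tokens: int) -> List[str]:
    """Keep the most recent messages whose combined size fits the budget.

    Token counts are approximated by whitespace-separated words, which is an
    assumption made for this sketch only.
    """
    kept: List[str] = []
    used = 0
    for message in reversed(messages):
        cost = len(message.split())
        if used + cost > max_tokens:
            break  # older messages no longer fit and are dropped
        kept.append(message)
        used += cost
    return list(reversed(kept))


history = [
    "step 1: open the app",
    "step 2: search for coffee",
    "step 3: add to cart",
]
print(fit_to_context(history, max_tokens=9))
```

With a budget of 9 words only the latest step survives, which shows how earlier task information is lost once the prompt outgrows the window.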

2. LLM applications

The key takeaway from this analysis is the emphasis on the LLM’s human-like capabilities. These models are not merely tools to perform predefined tasks; they are evolving entities capable of learning from their environment and making decisions that resemble human reasoning.

It is clear that LLMs have huge potential to change the landscape of virtual assistants and process automation. Their ability to understand complicated instructions, reason on their own, and effectively perform multi-step tasks makes them key players in the future of automation. However, several challenges remain for future research and development, such as ensuring unbiased behavior, refining training data, and optimizing performance in different environments.

As we move forward, enhancing the sustainability and adaptability of these models should be our main priorities. This includes expanding their application across different domains and tasks, continuously improving their accuracy and efficiency, and addressing any limitations related to training data and other potential biases.

Integrating LLMs into process automation and making them a core component of virtual assistants are significant steps towards more intelligent, flexible and efficient systems. These systems are capable of automatically handling complex tasks, opening up many possibilities for future applications and continuously improving AI capabilities. From here, the journey includes not only technological advancements but also a deeper understanding of the ethical and practical implications of deploying these advanced models in real-life situations.

2.1. Intelligent virtual assistant with LLM-based process automation

Intelligent virtual assistants such as Siri, Alexa, and Google Assistant have become an indispensable part of daily life. However, they still struggle with complex or multi-step tasks. With the rapid development of LLMs, these models are expected to enhance the capabilities of virtual assistants by better understanding natural language, applying reasoning, and arranging sequences of activities. The improved cause-and-effect reasoning of LLMs can help assistants interpret ambiguous instructions, decode complex goals, and complete sequential tasks automatically.

Below is a new LLM-based process automation (LLMPA) virtual assistant system. The system works in mobile applications based on high-level user requests. The system differs from other assistants by simulating detailed human interactions, allowing it to perform more complex multi-stage processes based on natural language. Unlike current assistants that rely on simple predefined behaviors, an LLMPA agent can simulate specific operations such as clicking, scrolling, and typing, enabling it to handle complex goals such as free-form instructions and creative problem solving.

The LLMPA system includes modules for decomposing instructions, generating descriptions, detecting interface elements, predicting future actions, and error checking. The system was demonstrated in the mobile payment app Alipay, where it navigated the app to order coffee based on high-level instructions. This showcases how LLMs can enable mobile assistants to automatically handle complex tasks using natural language and environmental context.

The main contributions are a new LLMPA architecture, a methodology for applying LLM-based assistants to mobile applications, and demonstrations of multi-step task completion in a real-world environment. This work represents the first real-world deployment and extensive evaluation of an LLM-based virtual assistant in a widely used mobile application, marking a major milestone in the transition of LLM research to practical applications with significant impact. The system’s successful implementation in Alipay serves as a testament to the advancements that a modern LLM can make, demonstrating how assistants can parse instructions, reason about goals, and execute tasks reliably to support millions of users.

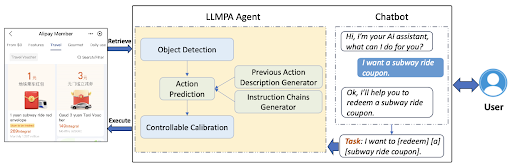

Figure 3: The architecture of Intelligent virtual assistants includes LLMPA Agent and Chatbot.

As shown in Figure 3, in the proposed intelligent virtual assistant system the user communicates with the chatbot to outline the goal, while the LLMPA Agent works with the application to complete the tasks. The chatbot includes a multi-turn dialogue module and an intent extraction module. This setup enables the system to understand user requests and generate appropriate task descriptions, but it is not the focus of this article.

The LLMPA agent is responsible for understanding the task, decoding it, and executing it methodically. It includes the following modules:

- Instruction Chains Generator: This module breaks down tasks into detailed step descriptions.

- Previous Action Description Generator: Based on previous actions and page content, this module generates an easy-to-understand description of the action.

- Object Detection: This is an object detection model used to detect sections on the page. Text within each section is categorized into groups, which creates a distinct hierarchy and improves contextual comprehension.

- Action Prediction: Based on the output of previous modules, this module creates prompts to directly predict the next action.

- Controllable Calibration: This module addresses the hallucination phenomenon in LLMs by carefully examining the predicted action to make sure it is feasible.
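The way these modules hand work to one another can be sketched as a single step of the agent loop. This is a simplified illustration under stated assumptions, not the LLMPA implementation: the `Action` type, the `run_step` and `calibrate` functions, and the stub models are hypothetical, and calibration is reduced here to checking that the predicted action is one of the operations named in the article and targets an element that was actually detected on the page.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

ALLOWED_ACTIONS = {"click", "scroll", "type"}  # operations named in the article


@dataclass
class Action:
    kind: str                   # "click", "scroll", or "type"
    target: str                 # label of the interface element to act on
    text: Optional[str] = None  # text to enter, for "type" actions


def calibrate(action: Action, detected_elements: List[str]) -> bool:
    """Controllable Calibration (simplified): accept only feasible actions."""
    return action.kind in ALLOWED_ACTIONS and action.target in detected_elements


def run_step(
    step: str,
    history: List[str],
    detect: Callable[[], List[str]],                          # Object Detection
    predict: Callable[[str, List[str], List[str]], Action],   # Action Prediction
    describe: Callable[[Action], str],  # Previous Action Description Generator
) -> Optional[Action]:
    """Run one instruction step; return the action, or None if it is rejected."""
    elements = detect()
    action = predict(step, history, elements)
    if not calibrate(action, elements):
        return None  # likely hallucinated: the target is not on the page
    history.append(describe(action))
    return action


# Hypothetical stubs standing in for the real models.
def detect() -> List[str]:
    return ["search box", "order button"]


def predict(step: str, history: List[str], elements: List[str]) -> Action:
    # A real predictor would prompt an LLM; this one maps keywords to actions.
    if "search" in step:
        return Action("type", "search box", text="coffee")
    return Action("click", "checkout button")  # element not on the page


def describe(action: Action) -> str:
    return f"{action.kind} on {action.target}"


history: List[str] = []
# Stand-in for the output of the Instruction Chains Generator.
steps = ["search for coffee", "pay for the order"]
results = [run_step(s, history, detect, predict, describe) for s in steps]
print(results)
```

In this run the first step is accepted and recorded in the history, while the second is rejected because its target was never detected, which is the kind of infeasible prediction the calibration module is meant to catch.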

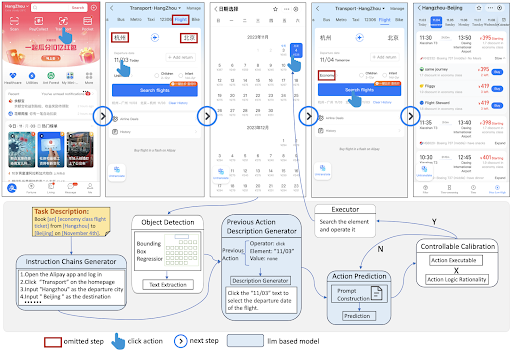

Figure 4: LLMPA agent pipeline for Alipay.

Based on LLMs, the proposed virtual assistant system (Figure 4) excels at parsing complex instructions, reasoning about goals, and automatically executing tasks in sequences. The main advantage of this system lies in its enhanced natural language processing and reasoning capabilities. By leveraging extensive training data, the system can understand ambiguous or incomplete instructions, infer user intent, and support task completion in a multi-step process.

However, the system is limited by factors such as training data constraints and possible biases or behavioral errors. Additionally, large language models are resource-intensive, which can pose challenges for mobile deployments.

The authors introduced a creative approach to intelligent virtual assistants using LLMs designed specifically for mobile application automation. They proposed an end-to-end architecture, consisting of the LLMPA model, environment context, and an executor, that enables automated multi-step task completion in real-world payment applications from natural language instructions. Large-scale testing on the widely used Alipay platform demonstrated that modern LLMs can power assistants capable of understanding goals, planning, and accomplishing intricate real-world procedures to assist users. This marks a significant advancement for intelligent assistants in popular mobile applications. The research highlights the future potential for creating virtual agents through the development of contextual processing, inference capabilities, and optimized deployment on devices.

2.2. LLMs in business process management tasks

The goal of Business Process Management (BPM) is to understand and manage work execution within an organization to ensure consistency in outputs and identify opportunities for improvement. BPM uses diverse sources of information, from structured process models to unstructured textual documents. In recent years, BPM researchers have increasingly turned to Natural Language Processing (NLP) techniques to automatically extract process-related information from textual data.

Many existing approaches utilize textual data for a wide range of BPM tasks, such as mining process models from descriptions, classifying end-user feedback, and identifying suitable tasks for robotic process automation (RPA). Although a few approaches also incorporate machine learning methods, the majority rely on extensive rule sets. However, each approach is typically designed for a specific task, and a versatile general-purpose model that comprehends process-related text does not yet exist.

With the emergence of pre-trained LLMs that demonstrate strong inference capabilities across domains, researchers are investigating their potential in BPM, including analyzing LLM opportunities and challenges that exist in the BPM lifecycle. Recent publications highlight the potential and difficulties of LLMs but have not introduced specific applications.

This article takes an application-oriented approach by evaluating whether an LLM can accomplish three BPM tasks: (1) mining imperative process models, (2) mining declarative process models, and (3) assessing the suitability of process tasks for RPA. These tasks were selected because they are practically relevant and have previously been addressed in research. The article compares LLMs to existing solutions developed for each task and discusses implications for future research and how an LLM can support practical usage.

The researchers developed and applied an approach that uses GPT-4, an LLM, for diverse BPM tasks. The approach is simple: it draws on GPT-4’s task-completion capabilities by giving it precise instructions. The researchers focused on three BPM tasks to demonstrate the effectiveness of GPT-4: mining declarative and imperative process models from textual descriptions, and assessing the suitability of process tasks for RPA. In most cases, GPT-4 performs similarly to or better than the benchmarks, which are approaches built specifically for the respective tasks.

The main benefit of this approach is GPT-4’s flexibility and strong performance across a variety of BPM tasks. The researchers found that the outputs were relatively consistent even with different executions of the same prompt, although some prompts benefited from the inclusion of examples to support the LLMs. The study also analyzed the robustness of inputs and outputs, suggesting that future research should explore whether LLMs can be applied to other BPM lifecycle tasks. Overall, this article illustrates practical applications of GPT-4, emphasizes its effectiveness and robustness, and offers implications for research and future practical applications.
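The idea of steering an LLM with precise instructions, optionally supported by examples, can be sketched for the RPA suitability task as follows. The prompt wording, the two labels, and the `build_rpa_prompt` and `parse_label` helpers are assumptions made for illustration; they are not the prompts used in the study, and no model is actually called here.

```python
from typing import List, Optional, Tuple


def build_rpa_prompt(
    task: str, examples: Optional[List[Tuple[str, str]]] = None
) -> str:
    """Build an instruction prompt asking whether a process task suits RPA."""
    blocks = [
        "You are a business process analyst.",
        "Classify the process task as 'suitable' or 'not suitable' for "
        "robotic process automation (RPA). Answer with the label only.",
    ]
    # Optional labeled examples, which some prompts benefit from.
    for example_task, label in examples or []:
        blocks.append(f"Task: {example_task}\nLabel: {label}")
    blocks.append(f"Task: {task}\nLabel:")
    return "\n\n".join(blocks)


def parse_label(reply: str) -> str:
    """Normalize a model reply to one of the two labels, or 'unknown'."""
    text = reply.strip().lower()
    if text.startswith("not suitable"):
        return "not suitable"
    if text.startswith("suitable"):
        return "suitable"
    return "unknown"


prompt = build_rpa_prompt(
    "Copy invoice totals into the ERP system",
    examples=[("Negotiate contract terms with a supplier", "not suitable")],
)
print(prompt)
```

Constraining the answer to a fixed label set and normalizing the reply makes it easier to compare outputs across repeated executions of the same prompt.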

3. An experimental model at FIS for contract review

Based on the comprehensive literature review mentioned above, which delves into the capabilities and limitations of the LLMs in BPM, we have identified a promising research direction and developed an innovative virtual assistant. This assistant leverages LLMs to enhance process automation in companies. Our intelligent virtual assistant is designed to understand, reason, and perform complex tasks based on natural language guidance, which can help to streamline operations and improve efficiency. Our solution, which integrates advanced LLM technology, demonstrates the potential for AI-driven process automation in organizational environments, particularly in legal processes where business contracts are automatically extracted and reviewed by LLMs before authorized employees sign them.

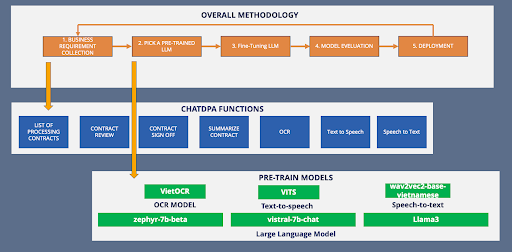

Figure 5: An overview of FIS ChatDPA’s structure to meet business needs.

Our research starts from the practical needs of companies, especially their legal teams. The primary responsibility of the legal team is to review contracts and look for any ambiguous or hidden clauses. Without LLM-based process automation, legal teams may face the following difficulties when reviewing paperwork and contracts:

- Time-consuming: Manual contract review may require a significant amount of time and effort, especially when involving lengthy or complex documents. Legal teams often need to read through documents carefully to identify key terms, clauses, and potential issues.

- Error-prone: Human judgment is prone to error, especially when under time pressure. Ignoring important details or small differences could result in missed opportunities or unanticipated legal consequences.

- Inconsistent assessment standards: Different team members may interpret or analyze contract terms differently, leading to inconsistencies in assessments. This inconsistency can lead to different assessments in terms of risk or compliance.

The architecture provided in Figure 5 demonstrates an innovative approach to enhancing a legal team’s workflow using advanced AI technologies. The methodology, which is highlighted in the “General Methodology” section, shows how to use LLMs and related technologies in a structured manner to process legal documents effectively. This approach includes five main steps: gathering business requirements, selecting a pre-trained LLM, fine-tuning the model, assessing its performance, and implementing the solution.

As can be seen in the “ChatDPA Functions” section, the architecture incorporates a number of functional components, including contract review, contract summarization, OCR (Optical Character Recognition), and Text to Speech. These components directly address the core needs of legal teams, who frequently handle large volumes of contracts and paperwork.

By integrating pre-trained models such as VietOCR, VITS, wav2vec2, and LLMs such as zephyr-7b-beta, vistrala-7b-chat and Llama3, this architecture provides a powerful set of tools for automating and streamlining a range of legal processes. OCR technology facilitates text extraction from scanned documents, while text-to-speech and speech-to-text functions enhance user engagement and accessibility.
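How the OCR, summarization, and review functions might be chained can be sketched as below. This is only an assumed composition, not ChatDPA's actual code: each component is passed in as a plain callable, and the stubs stand in for the real OCR model and LLM so that no specific library API is implied.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class ReviewReport:
    text: str                   # text extracted from the scanned pages
    summary: str                # contract summary
    flagged_clauses: List[str]  # clauses a reviewer should look at


def review_contract(
    scanned_pages: List[bytes],
    ocr: Callable[[bytes], str],                # hypothetical OCR component
    summarize: Callable[[str], str],            # hypothetical LLM summarizer
    flag_clauses: Callable[[str], List[str]],   # hypothetical LLM reviewer
) -> ReviewReport:
    """Extract text from each page, then summarize and review the result."""
    text = "\n".join(ocr(page) for page in scanned_pages)
    return ReviewReport(
        text=text,
        summary=summarize(text),
        flagged_clauses=flag_clauses(text),
    )


# Stubs that only make the sketch runnable; real models would replace them.
def stub_ocr(page: bytes) -> str:
    return page.decode("utf-8")


def stub_summarize(text: str) -> str:
    return text.split(".")[0] + "."


def stub_flag(text: str) -> List[str]:
    return [s.strip() for s in text.split(".") if "penalty" in s.lower()]


pages = [b"The supplier delivers monthly. A penalty of 5% applies to late delivery."]
report = review_contract(pages, stub_ocr, stub_summarize, stub_flag)
print(report.summary)
print(report.flagged_clauses)
```

Keeping each function behind a callable means any one component can be replaced without touching the rest of the pipeline.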

3.1. Key benefits

- Increase efficiency: The structured approach and automated functions significantly reduce the time and effort required for contract review and management, allowing legal teams to focus on higher-level tasks.

- Improve accuracy: By using advanced LLM and OCR technology, the architecture minimizes the risk of human mistakes in the processing and interpretation of legal documents.

- Streamline workflow: From drafting documents to signing contracts, integrated functions comply with standard legal processes, improving productivity and reducing bottlenecks.

- Accessibility: Text-to-speech and speech-to-text functions increase accessibility and assist legal professionals who might need or prefer audio-based engagement.

This architecture provides a comprehensive solution tailored to the demanding needs of legal teams, which combines advanced AI capabilities with real-world workflow improvements.

Without such automation, manual contract review also faces the following difficulties:

- Document volume: It might be challenging for legal teams to thoroughly assess every contract without automated assistance because they frequently have to handle enormous amounts of paperwork. Teams may become overwhelmed by this volume, which could cause delays or errors.

- Complex language: Legal documents are often written in complex language or legal jargon, making them difficult to interpret accurately without specialized knowledge. This complexity can make review and analysis less efficient.

- Repetition: Contract review involves repetitive tasks, such as cross-referencing and standard clause checking. Over time, this repetition may cause weariness and decreased accuracy, which will lower the quality of the assessment.

- Difficulty in detecting anomalies: Identifying unusual or non-standard clauses in a contract requires attention to detail. Without LLM-based automation, detecting these anomalies can be difficult and may require more time and effort.

- Lack of standardization: Different contracts may follow different formats or structures, making it more difficult for legal teams to establish a standardized review process. This lack of standardization raises the possibility of missing important details and may lead to inefficiency.

ChatDPA’s architecture demonstrates its potential beyond contract review, positioning it as an ideal automated processing agent powered by LLMs for various tasks in the banking and finance industries. With its powerful functions, including text-to-speech, natural language processing, and optical character recognition (OCR), ChatDPA is a great choice for complex tasks such as customer onboarding, loan application processing, and financial transaction monitoring.

3.2. Some applications in the finance/banking industries

- Customer support: ChatDPA can automatically guide new customers through the onboarding process, from account creation to document verification, using advanced natural language processing to clarify customer queries and automatically fill out forms.

- Loan processing support: By leveraging the document processing capabilities powered by LLMs, ChatDPA can review and summarize loan applications, perform credit checks, and even suggest suitable loan products, which helps streamline the entire loan approval process.

- Transaction monitoring: ChatDPA’s capability to analyze patterns and detect anomalies makes it a great tool for monitoring financial transactions to detect fraud or suspicious activity, strengthening bank security standards.

4. Conclusion

In the current dynamic technological environment, autonomous agents have become prominent, especially in sectors like finance and banking, where efficiency and accuracy are crucial. These agents, powered by advanced AI models such as LLMs, are revolutionizing the way businesses operate, driving automation across a wide range of complex processes. These systems are appealing because they can reduce labor costs and boost productivity by automating operations, processing large volumes of data, and adapting to changing circumstances.

The need for autonomous agents is driven by the increased complexity of business operations and the growing demand for solutions that can handle complex tasks with minimal human intervention. These agents, for instance, can streamline processes such as customer onboarding, loan application processing, and transaction monitoring in financial institutions while maintaining a high level of accuracy and consistency. The application of AI-powered autonomous agents extends beyond the financial sector and affects many other industries that benefit from automation and intelligent decision-making.

Exclusive article by FPT IS expert. Author: Le Khac De, Director of Data Platform & Analytics Center, FPT IS